Research

The overarching goal of our research is to integrate soft and compliant robot structures with novel learning and control algorithms to enable robots to safely and efficiently collaborate with humans and autonomously operate in challenging environments. Ongoing projects in the lab include aerial robotics, soft robotics, and human-robot interaction. Please check the summary of each project below and feel free to contact us if you have any questions or want to know more details!

We gratefully acknowledge the National Science Foundation (NSF), Air Force Office of Scientific Research (AFOSR), Office of Naval Research (ONR), National Aeronautics and Space Administration (NASA), Science Foundation Arizona, Arizona Department of Health Services, Salt River Project, Northrop Grumman Corporation, Honeywell Aerospace Technologies, and several internal funding sources for supporting our past and current research.

Aerial Robotics

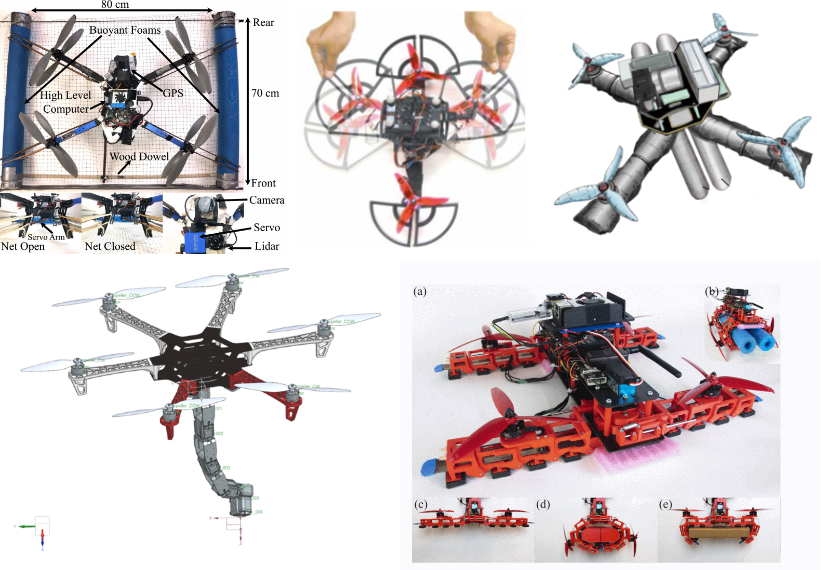

Unmanned aerial vehicles (UAVs) have been applied in aerial photography, surveillance, search and rescue, and precision agriculture. However, autonomous operation of small UAVs in dynamic environments poses challenges for both the vehicle hardware design and the embedded autonomy algorithms. Our research in this area includes (1) the design of morphing UAVs, (2) dynamic modeling and precision control for the new hardware, and (3) aerial-physical interaction for navigation and manipulation.

> High-Fidelity Simulation of Aerial Robots

Project Description

Build a simulation pipeline to rapidly test algorithms for aerial robots.

Integrate ROS 2 controllers into a simulation environment with the ArduPilot and PX4 flight controllers.

Representative Publications

Nonlinear Wrench Observer of an Underactuated Aerial Manipulator

Multi-Objective Optimization for Quadrotor Multibody Dynamic Simulations

> Design and Control of Flexible Quadrotors

Project Description

To exploit physical contact between a multi-rotor drone and its environment for better control and manipulation, and for higher safety.

Developing compliant multi-rotor drones that are passively resilient to contact, and detecting contacts via different sensing methods. Modeling and simulating contacts and collisions between drones and their physical environment.
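As one illustration of contact detection, the following sketch flags a collision when the measured acceleration magnitude departs from gravity by more than a threshold. It is a minimal example assuming an onboard accelerometer sampled at a fixed rate; the function name, threshold, refractory window, and data are hypothetical and are not taken from the lab's drones, which may rely on entirely different sensing methods.

```python
import math
from typing import List, Sequence, Tuple

GRAVITY = 9.81  # m/s^2

def detect_contacts(accel: Sequence[Tuple[float, float, float]],
                    threshold: float = 5.0,
                    refractory: int = 3) -> List[int]:
    """Return the sample indices at which a contact is flagged (hypothetical helper).

    A contact is flagged when | ||a|| - g | exceeds `threshold` (m/s^2). After a
    detection, the next `refractory` samples are skipped so that a single impact
    is not reported several times.
    """
    contacts: List[int] = []
    skip_until = -1
    for i, (ax, ay, az) in enumerate(accel):
        if i <= skip_until:
            continue
        deviation = abs(math.sqrt(ax * ax + ay * ay + az * az) - GRAVITY)
        if deviation > threshold:
            contacts.append(i)
            skip_until = i + refractory
    return contacts

# Hovering samples with two impacts (hypothetical data).
hover = (0.0, 0.0, GRAVITY)
samples = [hover] * 5 + [(12.0, 0.0, GRAVITY)] * 2 + [hover] * 3 + [(0.0, 15.0, GRAVITY)]
print(detect_contacts(samples))  # [5, 10]
```

A fixed threshold is the simplest possible choice; in flight, rotor vibration and aggressive maneuvers also produce large accelerations, so a practical detector would likely need filtering or model-based residuals.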

Representative Publications

A Soft-Bodied Aerial Robot for Collision Resilience and Contact-Reactive Perching

Design, Characterization and Control of a Whole-body Grasping and Perching (WHOPPEr) Drone

> Contact-Based Safe Navigation

Project Description

To exploit physical contact between a multi-rotor drone and its environment for better control, motion planning, and higher safety. Developing a safe planning and control algorithm for efficient, collision-based navigation. Building a simulator for reinforcement learning (RL)-based planning that integrates a contact model and a recovery controller.

Representative Publications

Tactile-based Exploration, Mapping and Navigation with Collision-Resilient Aerial Vehicles

Soft Robotics

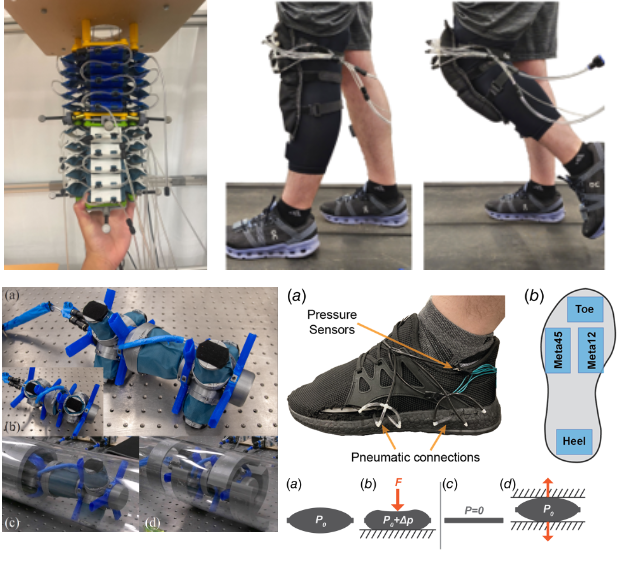

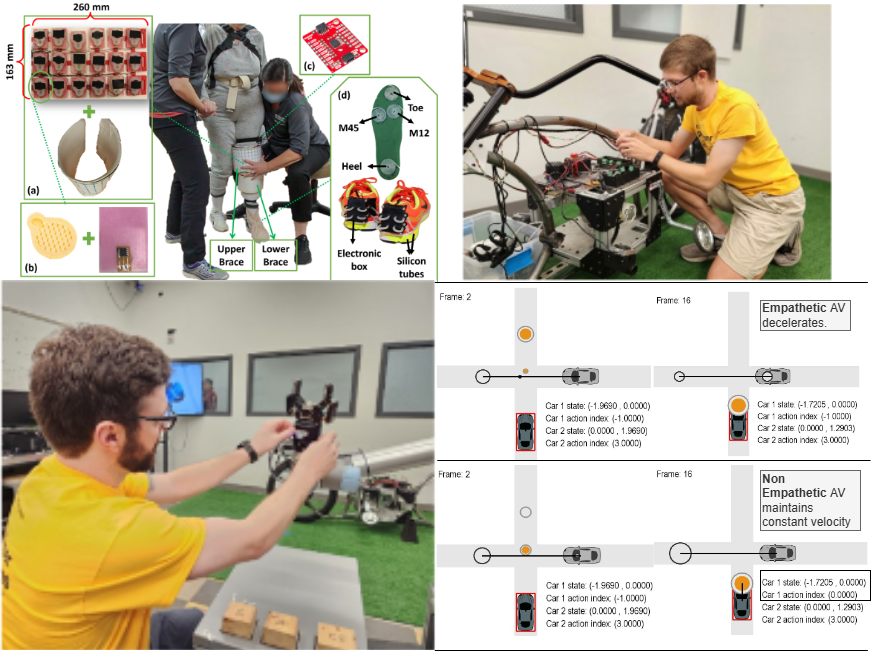

Soft robotics is reshaping the future of technology by developing flexible, adaptable systems that safely interact with humans and operate in complex environments. Current projects include soft robotic manipulators designed for advanced modeling and control, fabric-based exosuits for assistance and rehabilitation, and a soft pipe inspection robot capable of navigating intricate pipelines for health monitoring.

> Soft Robotic Arm

Project Description

Modeling and control of a soft robotic arm. We work with a pneumatically actuated soft robotic arm to test, train, and implement models, and to develop control algorithms for tasks including, but not limited to, trajectory tracking.
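The source does not state which model the lab uses for its arm. A common starting point for soft continuum arms is the piecewise constant curvature (PCC) assumption, in which each segment bends along a circular arc. The sketch computes planar forward kinematics under that assumption; the function name and the planar restriction are illustrative choices, and a model-based or learned trajectory-tracking controller would typically build on a map like this.

```python
import math
from typing import Sequence, Tuple

def pcc_tip_position(curvatures: Sequence[float],
                     lengths: Sequence[float]) -> Tuple[float, float, float]:
    """Planar forward kinematics under the PCC assumption (illustrative helper).

    Segment i bends with constant curvature kappa_i over arc length L_i.
    Returns (x, z, heading) of the tip, where heading is the tangent angle
    measured from the z-axis (the straight, unbent direction) toward +x.
    """
    x, z, heading = 0.0, 0.0, 0.0
    for kappa, length in zip(curvatures, lengths):
        theta = kappa * length  # bending angle of this segment
        if abs(kappa) < 1e-9:
            # Straight segment: limit of the arc formulas as kappa -> 0.
            dx_local, dz_local = 0.0, length
        else:
            dx_local = (1.0 - math.cos(theta)) / kappa
            dz_local = math.sin(theta) / kappa
        # Rotate the segment's local displacement into the base frame.
        x += dx_local * math.cos(heading) + dz_local * math.sin(heading)
        z += -dx_local * math.sin(heading) + dz_local * math.cos(heading)
        heading += theta
    return x, z, heading

# Two quarter-circle segments of unit length form a semicircle of radius 2/pi.
print(pcc_tip_position([math.pi / 2, math.pi / 2], [1.0, 1.0]))
```

In this example the tip should land at x = 4/pi, z = 0 with the tangent pointing back toward the base (heading = pi), which is a quick sanity check on the frame conventions.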

Representative Publications

Design and Computational Modeling of Fabric Soft Pneumatic Actuators for Wearable Assistive Devices

> Soft Robotic Exosuits

Project Description

Design, characterize, and test an exosuit based on soft inflatable actuators on healthy human subjects to evaluate the assistance it provides. Design and evaluate a soft robotic exosuit powered by new inflatable actuators, and develop its controls. To evaluate the effect of the exosuit during flexion and extension, surface electromyography (sEMG) sensors are placed to record muscle activity.
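The source does not describe how the sEMG recordings are processed. One common approach, shown here only as a sketch under that assumption, is to compute a moving root-mean-square (RMS) envelope of each already band-pass-filtered channel and compare mean activity with and without the exosuit. The helper names, window length, and signal values are hypothetical.

```python
import math
from typing import List, Sequence

def moving_rms(signal: Sequence[float], window: int) -> List[float]:
    """Moving RMS envelope: one output per full window of input samples."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [
        math.sqrt(sum(v * v for v in signal[i - window + 1:i + 1]) / window)
        for i in range(window - 1, len(signal))
    ]

def percent_reduction(baseline: Sequence[float], assisted: Sequence[float]) -> float:
    """Percent drop in mean envelope amplitude with the exosuit relative to without it."""
    base = sum(baseline) / len(baseline)
    assist = sum(assisted) / len(assisted)
    return 100.0 * (base - assist) / base

# Hypothetical filtered sEMG snippets (arbitrary units).
without_suit = moving_rms([0.4, -0.4, 0.4, -0.4, 0.4, -0.4], window=2)
with_suit = moving_rms([0.3, -0.3, 0.3, -0.3, 0.3, -0.3], window=2)
print(f"{percent_reduction(without_suit, with_suit):.1f}% less muscle activity")  # 25.0% less muscle activity
```

Real studies usually also normalize each channel (for example, to a maximum voluntary contraction) before comparing conditions across subjects; that step is omitted here.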

Representative Publications

Gait Sensing and Haptic Feedback Using an Inflatable Soft Haptic Sensor

A Kinematically Constrained Kalman Filter for Sensor Fusion in a Wearable Origami Robot

> Soft Pipe Inspection Robot

Project Description

This pipe inspection robot consists of several bistable inflatable fabric actuators, which enable it to navigate pipes of various sizes (4 to 6 inches in diameter) using inchworm locomotion. The robot is designed to handle obstacles within the pipes: the large bistable actuator at the center of the robot generates an impact force that allows it to push away or break through obstructions, while the smaller bistable actuators at the head and tail adapt to changes in pipe diameter. Ongoing work includes improving the bistable structure (materials, fabrication methods, etc.) to make it more reliable and robust, and controlling the robot to perform a jumping gait inside the pipe.
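The inchworm locomotion described above can be sketched as a repeating actuation sequence. This minimal example assumes that the head and tail actuators act as anchors against the pipe wall and that the center actuator toggles between a contracted and an extended state; the step names, stroke length, and slip-free anchoring are hypothetical simplifications, and the impact and jumping behaviors are not modeled.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass(frozen=True)
class Step:
    head_anchored: bool
    tail_anchored: bool
    center_extended: bool

# One gait cycle (hypothetical): exactly one end is anchored whenever the center changes state.
INCHWORM_CYCLE: List[Step] = [
    Step(head_anchored=False, tail_anchored=True, center_extended=False),  # release head
    Step(head_anchored=False, tail_anchored=True, center_extended=True),   # extend: head advances
    Step(head_anchored=True, tail_anchored=True, center_extended=True),    # anchor head
    Step(head_anchored=True, tail_anchored=False, center_extended=True),   # release tail
    Step(head_anchored=True, tail_anchored=False, center_extended=False),  # contract: tail advances
    Step(head_anchored=True, tail_anchored=True, center_extended=False),   # anchor tail
]

def simulate(cycles: int, stroke: float) -> Tuple[float, float]:
    """Return (head, tail) displacement after `cycles` cycles, assuming slip-free anchors."""
    head, tail, extended = 0.0, 0.0, False
    for _ in range(cycles):
        for step in INCHWORM_CYCLE:
            if step.center_extended == extended:
                continue  # only the anchors changed; the body does not move
            delta = stroke if step.center_extended else -stroke  # change in body length
            if step.tail_anchored and not step.head_anchored:
                head += delta  # tail holds, head moves
            elif step.head_anchored and not step.tail_anchored:
                tail -= delta  # head holds, tail moves
            else:
                raise ValueError("exactly one end must be anchored while the center actuates")
            extended = step.center_extended
    return head, tail

print(simulate(cycles=3, stroke=0.05))  # both ends advance by about 3 x 0.05 = 0.15
```

Keeping at least one end anchored whenever the center actuator switches is what turns a back-and-forth length change into net forward motion; in practice, slip at the anchors would reduce the distance per cycle.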

Representative Publications

Design and Gait Optimization of an In-Pipe Robot with Bistable Inflatable Fabric Actuators

Human-Robot Interaction

For humans and robots to collaborate safely and efficiently, a robot needs to understand human intents, predict human actions, and account for human factors, so that it can optimize its own actions and complete tasks with humans in a safe, efficient, and human-friendly manner. One major challenge is modeling human actions in highly dynamic tasks, given the strong variability and uncertainty of human behavior. We have developed a game-theoretic framework to model the bilateral inference and decision-making process between the human and the robot. Applications include autonomous vehicles, collaborative manufacturing, and wearable robots. For more details about how we apply the developed algorithms to autonomous vehicles, please check this page.

> Modeling Human Bias and Uncertainties in HRI

Project Description

Help AI better understand human preferences and decisions so that it can assist humans more effectively. Integrate risk-aware cognitive models, such as cumulative prospect theory (CPT), into interactive AI planning in an Overcooked environment and on a robotic Smart Bike.
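For readers unfamiliar with CPT, the following sketch evaluates a risky prospect using the standard cumulative prospect theory forms of Tversky and Kahneman (1992): a value function that is concave for gains and convex and steeper for losses (loss aversion), and an inverse-S probability weighting function applied to cumulative probabilities. The default parameters are the median estimates reported in that paper; the lab's models may use different functional forms or parameters fitted to their own participants, and the function names here are illustrative.

```python
from typing import Sequence, Tuple

def value(x: float, alpha: float = 0.88, beta: float = 0.88, lam: float = 2.25) -> float:
    """CPT value function: concave for gains, convex and steeper (loss-averse) for losses."""
    return x ** alpha if x >= 0 else -lam * (-x) ** beta

def weight(p: float, gamma: float) -> float:
    """Inverse-S probability weighting: overweights small, underweights large probabilities."""
    p = min(max(p, 0.0), 1.0)  # guard against round-off outside [0, 1]
    return p ** gamma / (p ** gamma + (1.0 - p) ** gamma) ** (1.0 / gamma)

def cpt_value(prospect: Sequence[Tuple[float, float]],
              gamma_gain: float = 0.61, gamma_loss: float = 0.69) -> float:
    """CPT value of a prospect given as (outcome, probability) pairs.

    Decision weights are rank-dependent: gains are ranked from best to worst and
    losses from worst to best, and each outcome receives the increment of the
    weighted cumulative probability. Zero outcomes contribute nothing.
    """
    gains = sorted((o for o in prospect if o[0] > 0), key=lambda o: o[0], reverse=True)
    losses = sorted((o for o in prospect if o[0] < 0), key=lambda o: o[0])
    total = 0.0
    for ranked, gamma in ((gains, gamma_gain), (losses, gamma_loss)):
        cumulative = 0.0
        for x, p in ranked:
            pi = weight(cumulative + p, gamma) - weight(cumulative, gamma)
            total += pi * value(x)
            cumulative += p
    return total

sure = cpt_value([(50.0, 1.0)])
gamble = cpt_value([(100.0, 0.5), (0.0, 0.5)])
print(sure > gamble)  # True: the sure option is preferred (risk aversion for gains)
```

An expected-value planner would be indifferent between these two options; a CPT-based human model predicts a preference for the sure outcome, which is the kind of bias an assistive planner can anticipate.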

Representative Publications

> Bilateral Reasoning and Learning in HRI

Project Description

Developing a game-theoretic controller for physical human-robot interaction scenarios, such as controlling assistive wearable robots. We aim to integrate incomplete-information games with optimal control and reinforcement learning to infer human intent during HRI tasks, and to model the human's possible learning process while interacting with the robot.
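In an incomplete-information game, the human's intent can be treated as a hidden "type" that the robot must infer from observed actions. A common ingredient, sketched here as an assumption rather than the lab's actual controller, is a Bayesian belief update that models the human as bounded-rational, choosing actions with softmax (Boltzmann) probabilities over each type's action values. The types, actions, action values, and rationality coefficient below are hypothetical.

```python
import math
from typing import Dict, Mapping

def boltzmann_likelihood(action: str, q_values: Mapping[str, float], rationality: float) -> float:
    """P(action | human type) for a bounded-rational (softmax) human."""
    best = max(q_values.values())  # subtract the max for numerical stability
    weights = {a: math.exp(rationality * (q - best)) for a, q in q_values.items()}
    return weights[action] / sum(weights.values())

def update_belief(belief: Mapping[str, float], action: str,
                  q_by_type: Mapping[str, Mapping[str, float]],
                  rationality: float = 1.0) -> Dict[str, float]:
    """One Bayesian update of the robot's belief over the human's hidden type (intent)."""
    unnormalized = {t: belief[t] * boltzmann_likelihood(action, q_by_type[t], rationality)
                    for t in belief}
    total = sum(unnormalized.values())
    return {t: w / total for t, w in unnormalized.items()}

# Hypothetical wearable-robot example: two intents, two possible human actions.
q_by_type = {
    "wants_to_stand": {"push": 1.0, "hold": 0.0},
    "wants_to_sit": {"push": 0.0, "hold": 1.0},
}
belief = {"wants_to_stand": 0.5, "wants_to_sit": 0.5}
belief = update_belief(belief, "push", q_by_type, rationality=2.0)
print(belief)
```

The rationality coefficient controls how noisy the modeled human is: as it grows, a single observed action becomes more decisive evidence. In a full game-theoretic controller, this belief would feed into the robot's policy, for example through optimal control or RL over belief states.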

Representative Publications

Bounded Rational Game-theoretical Modeling of Human Joint Actions with Incomplete Information